Part 1 – Basic Queries

To query WMI, you need to use the Get-WmiObject cmdlet. An example is below:
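Here is a minimal sketch of that kind of call. It uses the Win32_NetworkAdapter class, which later parts of this article filter and format; the command originally shown here isn't reproduced. It also assumes Windows PowerShell, because Get-WmiObject (alias gwmi) isn't available in PowerShell 6 and later, where Get-CimInstance replaces it.

```powershell
# Query every instance of the Win32_NetworkAdapter class on the local machine.
# Requires Windows PowerShell (5.1 or earlier); gwmi is a built-in alias.
$adapters = Get-WmiObject -Class Win32_NetworkAdapter

# No formatting is applied, so PowerShell chooses how to display the objects.
$adapters
```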

No special formatting, filtering, output, etc. A call to WMI like this will only query your

local machine. If you’re trying to get information from a remote computer, then

you need to use the –ComputerName flag. Be

warned, that the credentials that work for your computer might be completely

worthless on another machine. If that

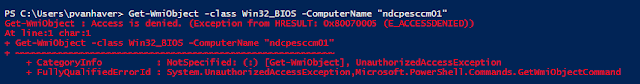

happens, you’ll see an error like this:

You have two options when this happens: you can either open a new PowerShell window with the “RunAs” right-click option and enter your credentials at that point, or you can use the -Credential parameter to enter them on the fly.
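The following is a sketch of a remote query. `$target` is a placeholder. It is set to the local computer name only so the block runs as written, so replace it with the name of a remote machine you manage (a hypothetical `SERVER01`, say).

```powershell
# Placeholder target: swap in a real remote computer name.
$target = $env:COMPUTERNAME

# -ComputerName sends the same WMI query to the target machine over DCOM.
$remoteAdapters = Get-WmiObject -Class Win32_NetworkAdapter -ComputerName $target

# The __SERVER system property shows which machine each result came from.
$remoteAdapters | Select-Object -First 3 -Property __SERVER, Name
```

To supply other credentials on the fly, add `-Credential (Get-Credential)` to the call, which prompts for a user name and password. WMI refuses alternate credentials on local connections, so that only works against a genuinely remote computer.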

That should be enough to give you a basic understanding of how to query WMI on your computer and remote machines.

Part 2 – Filtering Results

Sometimes when you query WMI, you’ll get a ton of results back. Easy examples of that are the Win32_Product class (products installed through Windows Installer, which covers much of what Add/Remove Programs lists) and our example, the Win32_NetworkAdapter class.

There are three typical ways of filtering WMI results. The first is to pipe the results to the Where-Object cmdlet (usually written with its alias where, or just ?).

Here’s what the where

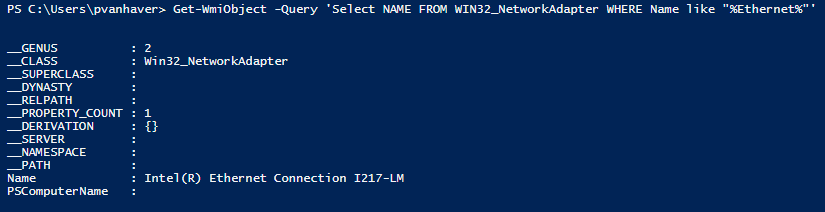

command looks like in action:
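In text form, a comparable where filter might look like the following. The `*Ethernet*` pattern is an assumed example and not necessarily the one the screenshot used.

```powershell
# Client-side filtering: WMI returns every adapter, then Where-Object keeps
# only those whose Name matches the (example) wildcard pattern.
$filtered = Get-WmiObject -Class Win32_NetworkAdapter |
    Where-Object { $_.Name -like '*Ethernet*' }

# The same filter written with the ? alias and a positional class name.
$filteredAlias = Get-WmiObject Win32_NetworkAdapter | ? { $_.Name -like '*Ethernet*' }

$filtered
```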

The downside to using where to filter your results is that the filtering only happens after WMI has handed the results back. This means that if you’re filtering against a giant list, WMI still generates that entire list (and, for a remote machine, sends all of it across the network), even though where throws most of it away. For things like our Win32_NetworkAdapter class, that isn’t a big deal, but if you’re filtering a few machines out of your entire SCCM SMS_R_Device database, it’s highly inefficient. When you have giant datasets to filter against, you’re usually better off filtering the list as it’s being generated. The first example of this is the -Filter parameter.
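Here is a sketch of the same filter pushed down to WMI with -Filter, again using the assumed `Ethernet` pattern. The filter string is the body of a WQL WHERE clause, written without the WHERE keyword.

```powershell
# Server-side filtering: WMI evaluates the WQL condition itself and returns
# only the adapters that already match.
$filtered = Get-WmiObject -Class Win32_NetworkAdapter -Filter "Name LIKE '%Ethernet%'"

$filtered
```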

Notice that the where command filters with -like and the * wildcard, while the WMI filter uses LIKE (with no leading hyphen) and the % wildcard instead. We can also use the -Query parameter to write the entire command as a WQL query statement.
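The equivalent -Query form might look like this sketch, which uses the same assumed pattern. The class name and the condition both live in one WQL statement.

```powershell
# The whole request as a single WQL statement.
$query = "SELECT * FROM Win32_NetworkAdapter WHERE Name LIKE '%Ethernet%'"
$filtered = Get-WmiObject -Query $query

$filtered
```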

That should give you a basic idea of how to filter your WMI search results.

Part 3 – Selecting Output

Up until now, we’ve just taken our output however WMI decided to give it to us, which was usually in List format. When you want a lot of detail about one or two specific results, List is a great format, but when you’re getting data about more than a couple of items, you usually want them as a Table. If you just want to select one data point from your results, there are three main ways of doing this. The first is to pipe your output to the Select-Object cmdlet or its alias, select.
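Here is a minimal sketch of that pipeline, which selects only the Name property from the adapters used throughout:

```powershell
# Select-Object builds new objects that carry only the Name property,
# so the output is a one-column table headed "Name".
$names = Get-WmiObject -Class Win32_NetworkAdapter | Select-Object -Property Name

$names
```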

Now, we just have the Name value. You can also do your selection from within a WQL query statement.
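The following sketch does the selection inside WQL. WMI returns partial objects that contain just Name, but those objects still carry WMI system properties such as __CLASS, which appear in the output alongside the value.

```powershell
# Ask WMI for only the Name property of each adapter.
$names = Get-WmiObject -Query "SELECT Name FROM Win32_NetworkAdapter"

$names
```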

What you’ll notice with both of those options is that some header information comes along with the value you wanted. Personally, I like to use this method of calling out one specific value:
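The author's preferred method isn't reproduced here, so this sketch shows two common ways to get bare values with no header. The first uses member access on the result, and the second uses Select-Object's -ExpandProperty parameter.

```powershell
# Option 1: member access. In PowerShell 3.0 and later, reading .Name on the
# collection returns the Name of every adapter (member enumeration).
$names1 = (Get-WmiObject -Class Win32_NetworkAdapter).Name

# Option 2: -ExpandProperty emits the property values themselves instead of
# wrapping them in new objects, so no "Name" header is printed.
$names2 = Get-WmiObject -Class Win32_NetworkAdapter | Select-Object -ExpandProperty Name

$names2
```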

As you can see, that just returns the value we requested, with no other information coming along for the ride.

Part 4 – Formatting and Exporting

Now, we’ve done some basic formatting by selecting only the values we needed, but let’s say your SCCM architect really wants a nice report to show off and has come to you about it. For this example, let’s say he wants a list of all the network adapters on your computer that actually have physical (MAC) addresses. Here’s what that statement looks like.
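Here is a sketch of such a statement. It assumes that “has a physical address” means the MACAddress property is populated. WQL supports IS NOT NULL, so WMI can handle the filtering itself.

```powershell
# Only adapters that report a MAC address; the condition runs inside WMI.
$physical = Get-WmiObject -Class Win32_NetworkAdapter -Filter "MACAddress IS NOT NULL"

$physical
```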

That gives us our dataset to work with, but it’s unformatted. To format it in a report-friendly way, we pipe it to the Format-Table cmdlet.
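The following sketch shows the Format-Table step. The choice of columns (Name, MACAddress, AdapterType) is an assumption about what the report needs.

```powershell
$physical = Get-WmiObject -Class Win32_NetworkAdapter -Filter "MACAddress IS NOT NULL"

# -Property picks the columns to show; -AutoSize fits column widths to the data.
$physical | Format-Table -Property Name, MACAddress, AdapterType -AutoSize
```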

Note that we can use the -Property parameter to specify which values from the dataset we want to display. Now, if you want to export this as a CSV, you can’t just pipe your Format-Table command to the Export-Csv cmdlet, because Format-Table outputs formatting instructions for the console rather than the original data. Instead, we use the select command from earlier to pick out the values we want, and pipe that to Export-Csv.
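Here is a sketch of the export pipeline. The output path under `$env:TEMP` is a hypothetical location, and the selected columns match the assumed report above.

```powershell
# Hypothetical output location; change it to wherever the report should go.
$csvPath = Join-Path $env:TEMP 'adapters.csv'

# Select-Object keeps real data objects (Format-Table would not), so
# Export-Csv can write each selected property as a column.
Get-WmiObject -Class Win32_NetworkAdapter -Filter "MACAddress IS NOT NULL" |
    Select-Object -Property Name, MACAddress, AdapterType |
    Export-Csv -Path $csvPath -NoTypeInformation
```

Running `Import-Csv -Path $csvPath` afterward is a quick way to confirm that the file holds the expected columns.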

You’ll get no output on your screen (assuming you typed it all in correctly), but when you open the CSV you created, you’ll see your data.

You may be wondering about the -NoTypeInformation parameter. It stops Export-Csv from writing a line of type information (starting with #TYPE) into the first row of your CSV file, like this:
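To see the difference yourself, this sketch exports the same data without -NoTypeInformation to a hypothetical file under `$env:TEMP` and then prints the first two lines. In Windows PowerShell, the first line is a #TYPE comment and the real column headers move to line 2.

```powershell
# Hypothetical output location for the comparison file.
$csvPath = Join-Path $env:TEMP 'adapters-with-type.csv'

# Same export as before, but without -NoTypeInformation.
Get-WmiObject -Class Win32_NetworkAdapter -Filter "MACAddress IS NOT NULL" |
    Select-Object -Property Name, MACAddress |
    Export-Csv -Path $csvPath

# Line 1: the #TYPE line naming the exported object type; line 2: the headers.
Get-Content -Path $csvPath -TotalCount 2
```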

Hopefully, that gives you some basic information to start with. WMI is one of those topics that Get-Help doesn't document well, because each class has its own quirks, values, etc. Microsoft does have excellent documentation for each class once you have a basic understanding of how WMI works, so just go to Google® and type in the exact name of the class you're querying to find it.

Any further questions, just let me know!